UTF-8 why specify length in the first byte?

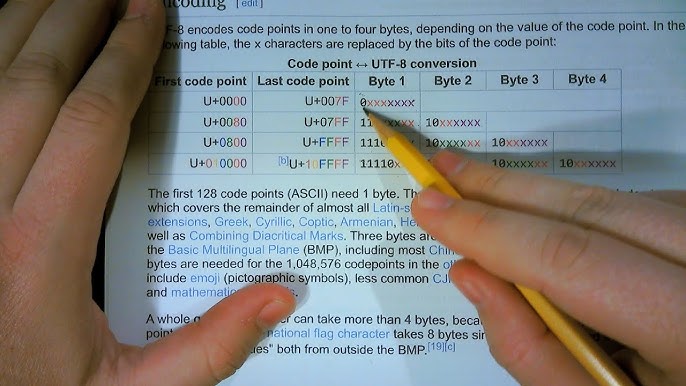

I've stumbled across this video which explains how UTF-8 encoding works really well but there is one thing I don't quite understand about the encoding of non ASCII characters. If I understood correctly these characters can consist of 1-4 bytes. The first byte has to start with 10, 110, 1110 or 11110 for a length of 1, 2, 3 or 4 bytes. The following byte(s) (of the same character) must start with 10. This makes sense but seems very wasteful to me. If instead the first byte of every character were to begin with 11 and following bytes (of the same charater) begin with 10 it would always be clear wether a byte is at the start of a character or not. Also in that way 4 byes would be able to encode 224 symbols instead of 221. The only benefit of the first method I can think of is that it is faster to count to or index at a certain character in a string as only the first byte of each character needs to be read. Are there any other benefits over or problems with the second system?

submitted by /u/zz9873

[link] [comments]